반응형

6.1 K-Means

Dataset

iris = sns.load_dataset('iris')

iris.head()

iris = iris.drop('species',axis=1)

df_kmeans = StandardScaler().fit_transform(iris)

df_kmeans = pd.DataFrame(df_kmeans)

df_kmeans.columns = iris.columns.values

print(df_kmeans.shape)

df_kmeans.head()

Cluster & fit

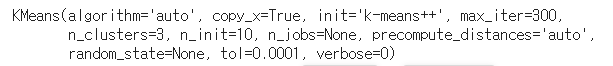

kmeans = KMeans(n_clusters=3)

kmeans.fit(df_kmeans)

Check labels & Predict

print(kmeans.labels_.shape)

print(kmeans.predict(df_kmeans).shape)

df_kmeans['cluster'] = pd.DataFrame(kmeans.labels_)

df_kmeans

Accuracy

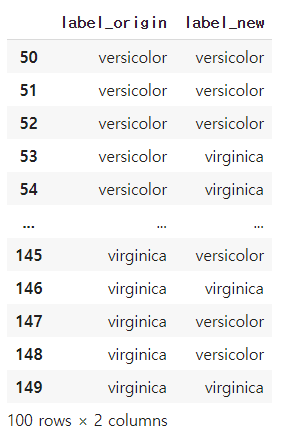

label_new = kmeans.labels_

acc = pd.DataFrame(label_new)

acc = pd.concat([label, acc], axis = 1)

acc.columns = ['label_origin','label_new']

acc.label_new = acc.label_new.astype(str)

acc.label_new = acc.label_new.replace('1','setosa')

acc.label_new = acc.label_new.replace('2','virginica')

acc.label_new = acc.label_new.replace('0','versicolor')

acc.tail(100)

same = 0

total = 0

for origin, new in zip(acc['label_origin'],acc['label_new']) :

if origin == new :

same += 1

total += 1

else :

total += 1

continue

accuracy = same/total

print('accuracy : ',accuracy)

#adjusted_rand_score(label1['label'],AC_cluster_labels)

Elbow Method

# Elbow method

from sklearn.cluster import KMeans

square_distance = []

for n_k in range(1, 40) :

num = KMeans(n_clusters = n_k)

num = num.fit(points)

square_distance.append(num.inertia_)

plt.figure(figsize=(15,6))

plt.gca().set_facecolor('#333333')

plt.xlim(0, 40)

plt.xticks(ticks=np.arange(0, 40, step=1))

plt.xlabel('Number of K')

plt.ylabel('Sum of squared distances')

plt.annotate('←This is Elbow',(4.5,26),fontsize = 18, color = 'yellow')

plt.title('Elbow method to find optimal K',fontsize=22)

plt.grid()

plt.plot(range(1, 40), square_distance, 'o-',color='yellow')

plt.show();

Silhouette Method

from sklearn.metrics import silhouette_score

sil = []

for k in range(2, 40):

kmeans = KMeans(n_clusters = k).fit(np.array(points))

labels = kmeans.labels_

sil.append(silhouette_score(np.array(points), labels, metric = 'euclidean'))

plt.figure(figsize=(15,6))

plt.gca().set_facecolor('#333333')

plt.xticks(ticks=np.arange(2, 40, step=1))

plt.xlabel('Number of K')

plt.xlim(1,40)

plt.ylabel('Silhouette_score')

plt.title('Silhouette method to find optimal K',fontsize=22)

plt.annotate('←The Highest Value',(4.5,0.695),fontsize = 18, color = 'yellow')

plt.grid()

plt.plot(range(2, 40), sil, 'o-',color='yellow')

plt.show();

Eyeball Method

Visualizition with PCA (pc=2)

from sklearn.cluster import KMeans

fig, axes = plt.subplots(1, 2, figsize=(20,8))

df_pca = finalDataFrame[['component 1','component 2']]

K_cluster = KMeans(n_clusters=3).fit_predict(df_pca)

axes[0].scatter(df_pca['component 1'],df_pca['component 2'],

c = K_cluster ,cmap = 'rainbow')

axes[0].set_title("K-Means with PCA",fontsize=20)

axes[1].set_title("Original Data",fontsize=20)

sns.scatterplot(x=finalDataFrame['component 1'],

y=finalDataFrame['component 2'],

hue = 'species',

data = finalDataFrame)

plt.show()

6.2 Hierarchical

Dataset

import scipy.cluster.hierarchy as shc

from sklearn.cluster import AgglomerativeClustering as AC

import seaborn as sns

from sklearn.cluster import AgglomerativeClustering

iris = sns.load_dataset('iris')

iris.head()

iris = iris.drop('species',axis=1)

iris.head(3)

Cluster & fit

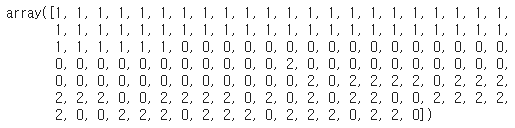

AC_cluster = AC(n_clusters=3).fit_predict(iris)

AC_cluster

Visualization

import scipy.cluster.hierarchy as shc

from sklearn.cluster import AgglomerativeClustering as AC

import seaborn as sns

from sklearn.cluster import AgglomerativeClustering

hierarchical_list = [ ]

for one, two in zip(df_pca['component 1'],df_pca['component 2']) :

hierarchical_list.append([one,two])

plt.figure(figsize=(14, 8))

plt.axhline(y=10, color='purple', linestyle=':', linewidth=7)

plt.text(14,12,'3 Cluster',fontsize=25,c = 'purple')

plt.title("hierarchical clustering",fontsize=20)

d = shc.dendrogram(shc.linkage(hierarchical_list, method='ward'))

Visualization with PCA (pc=2)

cluster = AC(n_clusters=3, affinity='euclidean', linkage='ward')

cluster_label = cluster.fit_predict(hierarchical_list)

fig, axes = plt.subplots(1, 3, figsize=(20,8))

df_pca = finalDataFrame[['component 1','component 2']]

K_cluster = KMeans(n_clusters=3).fit_predict(df_pca)

axes[0].set_title("K-Means clustering\nAccuracy : {:.2f}".format(adjusted_rand_score(label1['label'],K_cluster)),fontsize=20)

axes[0].scatter(df_pca['component 1'],df_pca['component 2'],

c = K_cluster ,cmap = 'rainbow')

colors = {'setosa':'r', 'virginica':'g', 'versicolor':'b'}

axes[1].scatter(df_pca['component 1'],df_pca['component 2'], c = label1['label'].apply(lambda x : colors[x]) )

axes[1].set_title("Original Data\nLABEL",fontsize=20)

axes[2].scatter(df_pca['component 1'],df_pca['component 2'],

c = cluster_label ,cmap = 'rainbow')

axes[2].set_title("Agglomerative Clustering\nAccuracy : {:.2f}".format(adjusted_rand_score(label1['label'],AC_cluster_labels)),fontsize=20)

plt.show()

6.3 K-Means & Hierarchical with T-SNE

from sklearn.manifold import TSNE as TS

from sklearn.metrics.cluster import adjusted_rand_score

import sklearn.metrics as metrics

df_tsne = StandardScaler().fit_transform(iris)

df_tsne = pd.DataFrame(df_tsne)

df_tsne.columns = iris.columns.values

model = TS(learning_rate=500)

transformed = model.fit_transform(df_tsne)

xs = transformed[:,0]

ys = transformed[:,1]

df_tsne = pd.DataFrame(transformed)

df_tsne.columns = ['tsne1','tsne2']

label1 = pd.DataFrame(label)

label1.columns = ['label']

fig, axes = plt.subplots(1, 3, figsize=(20,8))

K_cluster = KMeans(n_clusters=3).fit_predict(transformed)

axes[0].scatter(df_tsne['tsne1'],df_tsne['tsne2'], c = K_cluster ,cmap = 'rainbow')

axes[0].set_title("K-means Clustering\nAccuracy : {:.2f}".format(adjusted_rand_score(label1['label'],K_cluster)),fontsize=20)

AC_cluster = AC(n_clusters=3).fit_predict(transformed)

AC_cluster_labels = AC_cluster

axes[2].scatter(df_tsne['tsne1'],df_tsne['tsne2'], c = AC_cluster ,cmap = 'rainbow_r')

axes[2].set_title("Agglomerative Clustering\nAccuracy : {:.2f}".format(adjusted_rand_score(label1['label'],AC_cluster_labels)),fontsize=20)

colors = {'setosa':'r', 'virginica':'g', 'versicolor':'b'}

axes[1].scatter(df_tsne['tsne1'],df_tsne['tsne2'], c = label1['label'].apply(lambda x : colors[x]) )

axes[1].set_title("Original Data\nLABEL",fontsize=20)

axes[0].grid()

axes[1].grid()

axes[2].grid()

plt.show()

반응형

'기본소양 > 선형대수학' 카테고리의 다른 글

| 파이썬으로 하는 선형대수학 (Colab Tutorial) (0) | 2021.01.19 |

|---|---|

| 파이썬으로 하는 선형대수학 (5. Dimensionality Reduction) (0) | 2021.01.19 |

| 파이썬으로 하는 선형대수학 (4. 공분산과 상관계수) (0) | 2021.01.18 |

| 파이썬으로 하는 선형대수학 (3. Span, Rank, Basis, Projection) (0) | 2021.01.18 |

| 파이썬으로 하는 선형대수학 (1. 스칼라와 벡터 & 2. 매트릭스) (0) | 2021.01.18 |

댓글